ETL Testing Tools and Techniques: A Practical Guide

ETL testing ensures that data transferred from a source to its destination after business transformation is both accurate and consistent. This process involves verifying data at multiple stages between the source and destination to ensure reliability. ETL stands for Extract, Transform, and Load, representing the three key phases of this data migration process.

Ensuring Data Integrity with Data Warehouse Testing

Data Warehouse Testing is a crucial process that evaluates the integrity, accuracy, reliability, and consistency of the data stored within a data warehouse. This testing ensures that the data aligns with the organization’s data framework and is dependable for making critical business decisions. The primary goal is to guarantee that the integrated data is accurate and trustworthy, providing a solid foundation for informed decision-making.

Understanding ETL: The Key to Streamlining Data Integration

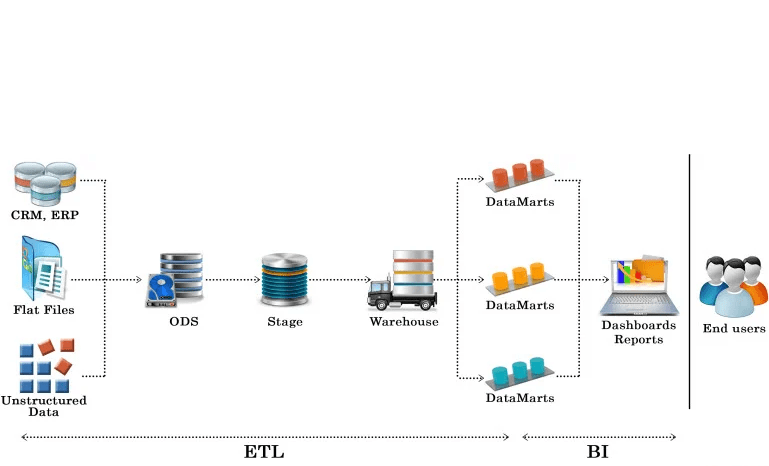

ETL, which stands for Extract-Transform-Load, is a process that defines how data moves from a source system to a data warehouse. During ETL, data is extracted from an operational database, transformed to align with the structure of the data warehouse, and then loaded into the warehouse for analysis. Beyond databases, ETL processes can also incorporate data from various sources like text files, legacy systems, and spreadsheets.

How Does ETL Work?

Imagine a retail store with multiple departments—sales, marketing, and logistics—each managing customer data in their own way. The sales team may organize data by customer name, while the marketing team might categorize it by customer ID. If they want to analyze a customer’s purchase history to evaluate the effectiveness of different marketing campaigns, it becomes a challenging and time-consuming task due to the varying data formats.

A data warehouse, with the help of ETL, solves this issue by consolidating data from different sources into a uniform structure. ETL transforms inconsistent data sets into a standardized format, making it easier to analyze. With BI tools, this unified data can then be used to generate valuable insights and reports.

The diagram below provides an overview of the ETL testing process flow and highlights important ETL testing concepts for a clearer understanding of the entire process.

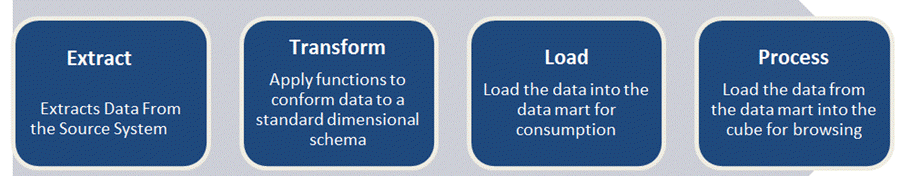

The ETL Process:

Extract:

Extract data from relevant sources.

Transform:

Convert data into a format suitable for the data warehouse.

- Building Keys: Create unique identifiers for entities, including primary, alternate, foreign, composite, and surrogate keys. Surrogate keys are generated and controlled by the data warehouse itself rather than by the source systems.

- Data Cleansing: Correct errors, remove inconsistencies, and ensure data is accurate. Conforming resolves incompatible data from different sources so that it can be used across the enterprise, and metadata is created to monitor data quality.

Load:

Load the cleaned and transformed data into the data warehouse.

- Building Aggregates: Summarize data from the fact table to improve query performance for end users.
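The three phases can be illustrated with a minimal, in-memory Python sketch. The source rows, the field names (`customer`, `amount`, `customer_sk`) and the functions are hypothetical. Surrogate keys are numbered in order of first appearance, and a Python list stands in for the warehouse fact table.

```python
from collections import defaultdict
from decimal import Decimal


def extract():
    """Extract: return raw rows from a hypothetical sales source."""
    return [
        {"customer": " alice ", "amount": "10.50"},
        {"customer": "BOB", "amount": "4.00"},
        {"customer": "Alice", "amount": "2.25"},
        {"customer": "", "amount": "1.00"},  # invalid: no customer
    ]


def transform(rows):
    """Transform: cleanse names and assign warehouse-controlled surrogate keys."""
    surrogate_keys = {}  # natural key (cleansed name) -> surrogate key
    clean, rejected = [], []
    for row in rows:
        name = row["customer"].strip().title()  # conform spacing and casing
        if not name:
            rejected.append(row)  # keep bad rows for reporting; do not load them
            continue
        key = surrogate_keys.setdefault(name, len(surrogate_keys) + 1)
        clean.append({"customer_sk": key, "customer": name,
                      "amount": Decimal(row["amount"])})
    return clean, rejected


def load(rows, warehouse):
    """Load: append transformed rows to an in-memory stand-in for a fact table."""
    warehouse.extend(rows)


def build_aggregates(fact_rows):
    """Build aggregates: total amount per customer surrogate key."""
    totals = defaultdict(Decimal)
    for row in fact_rows:
        totals[row["customer_sk"]] += row["amount"]
    return dict(totals)


warehouse = []
clean_rows, rejected_rows = transform(extract())
load(clean_rows, warehouse)
print(build_aggregates(warehouse))
print(f"{len(rejected_rows)} row(s) rejected")
```

Here " alice " and "Alice" conform to the same name and share one surrogate key. The row with an empty customer is rejected rather than loaded.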

This structured ETL process allows businesses to efficiently manage large, diverse data sets and derive meaningful insights.

The ETL Testing Lifecycle

Just like other testing processes, ETL testing follows a structured sequence of phases. Each step in the ETL testing lifecycle ensures accurate and reliable data transformation.

The ETL testing process consists of five key stages:

- Identify data sources and define requirements

- Acquire and extract data

- Implement business logic and dimensional modeling

- Populate and build the data warehouse

- Generate and review reports

Different Types of ETL Testing and Their Processes

Production Validation Testing

Production validation testing, also known as “table balancing” or “production reconciliation,” is performed on data as it is moved into production systems. Business decisions depend on this data, so it must be correct and properly organized. Informatica’s Data Validation Option automates and manages this kind of ETL testing to help keep production data reliable.

Source to Target Validation: Ensuring Accurate Data Transformation

Source to target validation testing verifies that the transformed data values match the expected values.

Streamlining Application Upgrades with Automated ETL Testing

Application upgrade testing checks that the data extracted from an older application or repository exactly matches the data in the new application or repository. These tests can be generated automatically, so they can significantly reduce test development time during upgrades.
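The old-versus-new comparison can be sketched in Python, assuming the rows have already been fetched from both systems as sequences of values (for example, through a database driver). The function name and sample rows are hypothetical. Unlike a set-based SQL `EXCEPT`, this comparison counts duplicates, so a row that appears twice in the old system must also appear twice in the new one.

```python
from collections import Counter


def compare_datasets(old_rows, new_rows):
    """Order-independent, duplicate-aware comparison of two row collections.

    Returns (missing, extra): rows present in the old system but not in the
    new one, and rows present in the new system but not in the old one.
    """
    old_counts = Counter(map(tuple, old_rows))
    new_counts = Counter(map(tuple, new_rows))
    missing = old_counts - new_counts  # Counter subtraction drops counts <= 0
    extra = new_counts - old_counts
    return list(missing.elements()), list(extra.elements())


old_app = [(1, "Alice"), (2, "Bob"), (2, "Bob")]
new_app = [(2, "Bob"), (1, "Alice"), (3, "Carol")]
missing, extra = compare_datasets(old_app, new_app)
print("missing:", missing)
print("extra:", extra)
```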

Comprehensive Metadata Validation

Metadata validation checks the structure that holds the data: data types, data lengths, and whether indexes and constraints are implemented correctly. This process is essential for maintaining the integrity and consistency of your database structure.
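A type-and-length check can be sketched against SQLite's `PRAGMA table_info`, which reports each column's declared type. The table `dim_customer` and the expected specification are hypothetical. SQLite records declared lengths such as `VARCHAR(50)` but does not enforce them. On other databases, the same comparison would typically query a catalog view such as `information_schema.columns`.

```python
import sqlite3


def check_schema(conn, table, expected):
    """Compare a table's declared column types with an expected specification.

    `expected` maps column name -> declared type (for example, taken from a
    mapping sheet). Returns a list of human-readable discrepancies.
    The table name is formatted into the PRAGMA, so it must be trusted.
    """
    # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
    actual = {row[1]: row[2].upper()
              for row in conn.execute(f"PRAGMA table_info({table})")}
    problems = []
    for column, declared in expected.items():
        if column not in actual:
            problems.append(f"missing column {column}")
        elif actual[column] != declared.upper():
            problems.append(f"{column}: expected {declared}, found {actual[column]}")
    for column in sorted(actual.keys() - expected.keys()):
        problems.append(f"unexpected column {column}")
    return problems


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE dim_customer ("
             "customer_id INTEGER NOT NULL, name VARCHAR(50), email VARCHAR(100))")
spec = {"customer_id": "INTEGER", "name": "VARCHAR(40)", "phone": "VARCHAR(20)"}
for problem in check_schema(conn, "dim_customer", spec):
    print(problem)
```

Indexes could be checked in a similar way through `PRAGMA index_list`. That extension is left out here.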

Ensuring Data Integrity: Comprehensive Completeness Testing

Data completeness testing is essential for ensuring that all expected data is accurately transferred from the source to the target system. This process involves various checks to validate that the data is fully and correctly loaded. Key tests include comparing and validating counts, aggregates, and actual data between the source and target systems, especially for columns with straightforward or no transformations. This helps ensure that data integrity is maintained throughout the migration process.
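The count-and-aggregate comparison described above can be sketched with Python's built-in `sqlite3` module. The tables `src_orders` and `tgt_orders` and the `amount_cents` column are hypothetical. Amounts are stored as integer cents so that the sums compare exactly.

```python
import sqlite3


def balance(conn, source, target, amount_col):
    """Compare row count and column total between a source and a target table.

    Table/column names are formatted into the SQL, so they must come from
    trusted test configuration, never from user input.
    """
    sql = "SELECT COUNT(*), COALESCE(SUM({col}), 0) FROM {table}"
    src = conn.execute(sql.format(col=amount_col, table=source)).fetchone()
    tgt = conn.execute(sql.format(col=amount_col, table=target)).fetchone()
    return {"source": src, "target": tgt, "balanced": src == tgt}


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER, amount_cents INTEGER);
    CREATE TABLE tgt_orders (order_id INTEGER, amount_cents INTEGER);
    INSERT INTO src_orders VALUES (1, 1050), (2, 400), (3, 225);
    INSERT INTO tgt_orders VALUES (1, 1050), (2, 400);  -- order 3 was not loaded
""")
print(balance(conn, "src_orders", "tgt_orders", "amount_cents"))
```

Matching counts and totals are necessary but not sufficient, because offsetting errors can cancel out. These checks complement row-level comparisons rather than replace them.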

Data Accuracy Verification

This testing ensures that data is correctly loaded and transformed according to the expected parameters, verifying the precision of data handling throughout the process.

Data Transformation Validation

Data transformation testing is rarely as simple as writing one source SQL query and comparing its output with the target. Multiple SQL queries may need to be run for each row to verify that transformation rules are applied correctly and that data is converted accurately.
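One common approach is to re-apply the documented rule to the source inside SQL and compare the result with the target row by row. The sketch below assumes a hypothetical mapping rule, `full_name = UPPER(TRIM(first_name)) || ' ' || UPPER(TRIM(last_name))`, and hypothetical tables `src_customer` and `tgt_customer`. The inner join deliberately ignores rows that are missing from the target, because completeness checks cover those.

```python
import sqlite3

# Hypothetical rule from a mapping sheet, written against the source alias `s`.
EXPECTED_FULL_NAME = "UPPER(TRIM(s.first_name)) || ' ' || UPPER(TRIM(s.last_name))"


def transformation_mismatches(conn):
    """Return (id, actual, expected) for target rows that break the rule."""
    sql = f"""
        SELECT s.id, t.full_name, {EXPECTED_FULL_NAME}
        FROM src_customer AS s
        JOIN tgt_customer AS t ON t.id = s.id
        WHERE t.full_name IS NOT ({EXPECTED_FULL_NAME})  -- null-safe comparison
        ORDER BY s.id
    """
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (id INTEGER PRIMARY KEY, first_name TEXT, last_name TEXT);
    CREATE TABLE tgt_customer (id INTEGER PRIMARY KEY, full_name TEXT);
    INSERT INTO src_customer VALUES (1, ' ada', 'Lovelace'), (2, 'alan', 'turing ');
    INSERT INTO tgt_customer VALUES (1, 'ADA LOVELACE'), (2, 'alan turing');
""")
for row in transformation_mismatches(conn):
    print(row)
```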

Data Quality Assessment

Data quality testing encompasses various checks to ensure data integrity. This includes syntax tests to identify errors like invalid characters or improper casing, and reference tests to validate data against the data model, such as verifying Customer IDs. It also involves number checks, date checks, precision checks, and null checks to ensure overall data quality.
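Several of these checks can be combined in one pass over a batch of rows. The following is a minimal sketch: the field names, the allowed-character rule for names, and the two-decimal precision limit are all hypothetical and would come from your own data model and requirements. The date check relies on `date.fromisoformat`, whose accepted ISO 8601 formats vary somewhat across Python versions.

```python
import re
from datetime import date
from decimal import Decimal, InvalidOperation

# Hypothetical syntax rule: names contain only letters, spaces, hyphens, apostrophes.
NAME_PATTERN = re.compile(r"[A-Za-z][A-Za-z' -]*")


def quality_issues(rows, valid_customer_ids):
    """Return (row_index, problem) pairs for a batch of order rows."""
    issues = []
    for i, row in enumerate(rows):
        # Null check and syntax check on the name
        name = row.get("name")
        if name is None:
            issues.append((i, "null name"))
        elif not NAME_PATTERN.fullmatch(name):
            issues.append((i, "invalid characters in name"))

        # Reference check: customer_id must exist in the customer dimension
        if row.get("customer_id") not in valid_customer_ids:
            issues.append((i, "unknown customer_id"))

        # Date check: must parse as an ISO 8601 date
        order_date = row.get("order_date")
        if order_date is None:
            issues.append((i, "null order_date"))
        else:
            try:
                date.fromisoformat(order_date)
            except (TypeError, ValueError):
                issues.append((i, "invalid order_date"))

        # Number and precision checks: numeric, at most 2 decimal places
        amount = row.get("amount")
        if amount is None:
            issues.append((i, "null amount"))
        else:
            try:
                value = Decimal(str(amount))
            except InvalidOperation:
                issues.append((i, "invalid amount"))
            else:
                if not value.is_finite():
                    issues.append((i, "invalid amount"))
                elif value.as_tuple().exponent < -2:
                    issues.append((i, "amount has more than 2 decimal places"))
    return issues


batch = [{"customer_id": 9, "name": "B@b", "order_date": "2024-02-30", "amount": "3.999"}]
for issue in quality_issues(batch, valid_customer_ids={1, 2}):
    print(issue)
```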

Incremental ETL Validation

This testing focuses on maintaining data integrity by verifying that both existing and newly added data are processed correctly during incremental ETL operations. It ensures that updates and inserts are handled as expected in ongoing data integrations.
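A common way to check an incremental load is to use a watermark, the timestamp of the last successful run. The check verifies that every source row changed after that point is reflected in the target. The sketch below assumes hypothetical `src_orders` and `tgt_orders` tables, a source `updated_at` column stored as ISO 8601 text (so string order matches time order), and `status` as the only tracked attribute.

```python
import sqlite3


def incremental_gaps(conn, last_watermark):
    """Source rows changed after the last ETL run that the target does not reflect.

    A missing target row means an insert was skipped; a different status
    means an update was not applied.
    """
    return conn.execute("""
        SELECT s.order_id, s.status
        FROM src_orders AS s
        LEFT JOIN tgt_orders AS t ON t.order_id = s.order_id
        WHERE s.updated_at > ?
          AND (t.order_id IS NULL OR t.status IS NOT s.status)
        ORDER BY s.order_id
    """, (last_watermark,)).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_orders (order_id INTEGER, status TEXT, updated_at TEXT);
    CREATE TABLE tgt_orders (order_id INTEGER, status TEXT);
    INSERT INTO src_orders VALUES
        (1, 'shipped',   '2024-01-01T09:00'),
        (2, 'cancelled', '2024-01-03T12:00'),
        (3, 'new',       '2024-01-04T08:30');
    INSERT INTO tgt_orders VALUES (1, 'shipped'), (2, 'open');
""")
print(incremental_gaps(conn, "2024-01-02T00:00"))
```

Rows changed before the watermark are not re-checked here. Unchanged existing data can be covered by the completeness and difference checks.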

User Interface and Navigation Testing

This testing examines the functionality and usability of the user interface and navigation elements within front-end reports, ensuring a smooth and intuitive user experience.

Crafting Effective ETL Test Cases: A Comprehensive Guide

ETL (Extract, Transform, Load) testing plays a crucial role in ensuring data integrity as it moves from source to destination through various transformations. The goal of ETL testing is to verify that the data is accurately loaded and transformed throughout the process. This includes validating the data at multiple stages between the source and destination.

When conducting ETL testing, two documents are essential for the tester:

ETL Mapping Sheets: These sheets detail the source and destination tables, including every column and its reference in lookup tables. Proficiency in SQL is important for ETL testers, as testing often involves crafting complex queries with multiple joins to validate data at various stages. ETL mapping sheets are invaluable for creating and executing these queries effectively.

Database Schema of Source and Target: Having access to the database schema is vital for cross-referencing details in the mapping sheets and ensuring accuracy in data verification.

These documents are fundamental for creating robust ETL test cases and ensuring the reliability of data management processes.

ETL Test Scenarios and Test Cases

Mapping Document Validation

Ensure that the mapping documents contain the necessary ETL information. Each mapping document should include a change log to track updates and modifications.

Structure Validation

- Ensure the structure of source and target tables aligns with the mapping document.

- Confirm that data types in both source and target tables match.

- Verify that the length of data types is consistent between the source and target.

- Check that data field types and formats are correctly defined.

- The length of target data types should not be shorter than the length of source data types; otherwise values may be truncated during loading.

- Cross-check column names in the tables against the mapping document to ensure accuracy.

Constraint Validation

Ensure that constraints are accurately defined and implemented for each specific table.

Data Consistency Challenges

- Data types and lengths for attributes may differ between files or tables, despite having the same semantic meaning.

- Improper application of integrity constraints.

Completeness Checks

- Verify that all expected data is successfully loaded into the target table.

- Compare record counts between the source and target tables.

- Identify and address any rejected records.

- Ensure data is not truncated in the target table columns.

- Conduct boundary value analysis.

- Compare unique values of key fields between the data loaded into the data warehouse and the source data.
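Two of these checks, comparing distinct key counts and spotting truncated values, can be sketched in a few SQL queries. The tables `src_customer` and `tgt_customer` are hypothetical. SQLite does not truncate text to a declared length, so the sample data simulates a truncated load by inserting a shortened value. A shorter target value only *suggests* truncation, because legitimate trimming would also shorten it.

```python
import sqlite3


def completeness_report(conn):
    """Distinct key counts and possibly truncated values between source and target."""
    def scalar(sql):
        return conn.execute(sql).fetchone()[0]

    distinct_keys = (
        scalar("SELECT COUNT(DISTINCT customer_id) FROM src_customer"),
        scalar("SELECT COUNT(DISTINCT customer_id) FROM tgt_customer"),
    )
    # A target value shorter than its source value suggests truncation.
    truncated = conn.execute("""
        SELECT s.customer_id, s.name, t.name
        FROM src_customer AS s
        JOIN tgt_customer AS t ON t.customer_id = s.customer_id
        WHERE LENGTH(t.name) < LENGTH(s.name)
        ORDER BY s.customer_id
    """).fetchall()
    return {"distinct_keys": distinct_keys, "truncated": truncated}


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (customer_id INTEGER, name TEXT);
    CREATE TABLE tgt_customer (customer_id INTEGER, name TEXT);
    INSERT INTO src_customer VALUES (1, 'Alexandria'), (2, 'Bo'), (3, 'Cy');
    -- simulated load: customer 1 truncated to 8 characters, customer 3 missing
    INSERT INTO tgt_customer VALUES (1, 'Alexandr'), (2, 'Bo');
""")
print(completeness_report(conn))
```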

Accuracy Issues

- Incorrect or misspelled data entries

- Data with null values, non-unique entries, or values outside the expected range

Data Transformation

Verify that the necessary transformations are applied to standardize and adjust data as required

Data Quality Assurance

- Number Validation: Ensure all numerical data is accurate and properly formatted

- Date Validation: Standardize date formats and ensure consistency across all records

- Precision Verification: Confirm the precision of numeric fields

- Data Integrity Check: Validate the accuracy and consistency of the data

- Null Value Check: Identify and handle null values appropriately

Null Value Verification

Confirm that columns designated as “Not Null” do not contain any null values

Duplicate Validation

- Validate uniqueness of key columns (unique keys, primary keys) as per business requirements

- Check for duplicate values in columns derived from multiple sources combined into one

- Ensure no duplicates exist in combinations of multiple columns within the target data
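Duplicate checks usually come down to `GROUP BY ... HAVING COUNT(*) > 1` over the key columns. The sketch below uses a hypothetical `fact_sales` table whose business key is the combination (`order_id`, `line_no`). It runs the check on `order_id` alone and then on the composite key, which shows why the correct key definition from the business requirements matters: an order legitimately has several lines, but a repeated (`order_id`, `line_no`) pair is a real duplicate.

```python
import sqlite3


def duplicate_keys(conn, table, key_columns):
    """Return key combinations that occur more than once, with their counts.

    Names are formatted into the SQL, so pass only trusted identifiers.
    """
    cols = ", ".join(key_columns)
    sql = (f"SELECT {cols}, COUNT(*) FROM {table} "
           f"GROUP BY {cols} HAVING COUNT(*) > 1 ORDER BY {cols}")
    return conn.execute(sql).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE fact_sales (order_id INTEGER, line_no INTEGER, product TEXT);
    INSERT INTO fact_sales VALUES
        (100, 1, 'A'), (100, 2, 'B'), (100, 2, 'B'), (101, 1, 'A');
""")
print(duplicate_keys(conn, "fact_sales", ["order_id"]))
print(duplicate_keys(conn, "fact_sales", ["order_id", "line_no"]))
```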

Date Validation

- Determine the creation date of each record

- Identify active records based on ETL development criteria

- Identify active records according to business requirements

- Monitor how date values affect updates and inserts
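For tables that keep history with start and end dates, one common date check is that each business key has exactly one active version on a given date. The sketch below assumes a hypothetical `dim_customer` table with ISO 8601 text dates (so string comparison matches date order), a `NULL` end date for open versions, and a hypothetical rule that every customer must have exactly one active version.

```python
import sqlite3


def active_record_violations(conn, as_of):
    """Customers that do not have exactly one active version on `as_of`."""
    return conn.execute("""
        SELECT customer_id, active_count
        FROM (
            SELECT customer_id,
                   SUM(CASE WHEN start_date <= :as_of
                             AND (end_date IS NULL OR end_date > :as_of)
                            THEN 1 ELSE 0 END) AS active_count
            FROM dim_customer
            GROUP BY customer_id
        )
        WHERE active_count <> 1
        ORDER BY customer_id
    """, {"as_of": as_of}).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER, city TEXT, start_date TEXT, end_date TEXT);
    INSERT INTO dim_customer VALUES
        (1, 'Oslo',   '2023-01-01', '2024-01-01'),
        (1, 'Bergen', '2024-01-01', NULL),
        (2, 'Rome',   '2023-05-01', NULL),
        (2, 'Milan',  '2024-02-01', NULL),
        (3, 'Lima',   '2023-01-01', '2024-03-01');
""")
print(active_record_violations(conn, "2024-06-01"))
```

In this data, customer 2's old version was never closed, and customer 3 has no current version.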

Comprehensive Data Validation

- Validate the entire dataset between source and target tables using difference queries

- Perform source-minus-target and target-minus-source comparisons

- Investigate any mismatching rows identified by difference queries

- Use intersect statements to verify matching rows between source and target

- Ensure intersect counts match individual counts of source and target tables

- If the intersect count is lower than the source or target count, investigate the discrepancy: it indicates duplicate rows (INTERSECT returns distinct rows) or rows that do not match
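The difference and intersect queries can be run together and summarized. This sketch uses SQLite, which, like PostgreSQL, spells the difference operator `EXCEPT`. Oracle traditionally uses `MINUS`. The table names are hypothetical, and both tables must have the same column list in the same order.

```python
import sqlite3


def diff_summary(conn, source, target):
    """Row-level comparison of two tables using set operators.

    Names are formatted into the SQL, so pass only trusted identifiers.
    """
    def rows(sql):
        return sorted(conn.execute(sql).fetchall())

    def scalar(sql):
        return conn.execute(sql).fetchone()[0]

    return {
        "source_count": scalar(f"SELECT COUNT(*) FROM {source}"),
        "target_count": scalar(f"SELECT COUNT(*) FROM {target}"),
        "source_minus_target": rows(f"SELECT * FROM {source} EXCEPT SELECT * FROM {target}"),
        "target_minus_source": rows(f"SELECT * FROM {target} EXCEPT SELECT * FROM {source}"),
        "intersect_count": scalar(
            f"SELECT COUNT(*) FROM (SELECT * FROM {source} INTERSECT SELECT * FROM {target})"),
    }


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE src_customer (id INTEGER, name TEXT);
    CREATE TABLE tgt_customer (id INTEGER, name TEXT);
    INSERT INTO src_customer VALUES (1, 'Ada'), (2, 'Bob'), (2, 'Bob'), (3, 'Cy');
    INSERT INTO tgt_customer VALUES (1, 'Ada'), (2, 'Bob'), (4, 'Dee');
""")
print(diff_summary(conn, "src_customer", "tgt_customer"))
```

Here the intersect count (2) is below the source count (4). The source has both a duplicate row, `(2, 'Bob')`, and a row that is missing from the target, `(3, 'Cy')`.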

Data Cleanliness

- Remove unnecessary columns before loading data into the staging area

Types of ETL Bugs

- User interface/cosmetic bugs: Related to the application's GUI, such as font style, font size, colors, alignment, spelling mistakes, and navigation.

- Boundary Value Analysis (BVA) bugs: Incorrect handling of minimum and maximum values.

- Equivalence Class Partitioning (ECP) bugs: Incorrect handling of valid and invalid input classes.

- Input/output bugs: Valid values are not accepted, or invalid values are accepted.

- Calculation bugs: Mathematical errors, or the final output is wrong.

- Load condition bugs: The system does not allow multiple users or does not support the load the customer expects.

- Race condition bugs: The system crashes or hangs, or cannot run on client platforms.

- Version control bugs: Logos do not match, or no version information is available. These usually surface during regression testing.

- Hardware bugs: The device does not respond to the application.

- Help source bugs: Mistakes in help documents.

Distinguishing Between Database Testing and ETL Testing

ETL Testing

- Verifies that data is moved as expected

- Verifies that record counts in the source and target match

- Verifies that foreign key/primary key relationships are preserved during the ETL process

- Checks for duplicates in the loaded data

Database Testing

- Checks that data follows the rules and standards defined in the data model

- Verifies that there are no orphan records and that foreign key/primary key relationships are maintained

- Verifies that there are no redundant tables and that the database is optimally normalized

- Verifies that data is not missing in columns where it is required
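An orphan-record check is typically a `LEFT JOIN` from the child table to the parent table, keeping rows that find no match. It is worth running explicitly because many warehouses do not enforce foreign key constraints. The `fact_sales` and `dim_customer` tables below are hypothetical. A fact row with a `NULL` `customer_id` would also be reported, since it matches no parent row.

```python
import sqlite3


def orphan_rows(conn):
    """Fact rows whose customer_id has no matching row in the customer dimension."""
    return conn.execute("""
        SELECT f.sale_id, f.customer_id
        FROM fact_sales AS f
        LEFT JOIN dim_customer AS d ON d.customer_id = f.customer_id
        WHERE d.customer_id IS NULL
        ORDER BY f.sale_id
    """).fetchall()


conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE dim_customer (customer_id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE fact_sales (sale_id INTEGER, customer_id INTEGER);
    INSERT INTO dim_customer VALUES (1, 'Ada'), (2, 'Bob');
    INSERT INTO fact_sales VALUES (10, 1), (11, 3), (12, 2);
""")
print(orphan_rows(conn))
```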

Key Responsibilities of an ETL Tester

As an ETL (Extract, Transform, Load) tester, your role involves a variety of critical tasks to ensure data integrity and process efficiency. These responsibilities can be broadly categorized into three main areas:

- Stage Table Handling (SFS or MFS)

- Application of Business Transformation Logic

- Loading Target Tables from Stage Files or Tables Post-Transformation

Core Duties of an ETL Tester

- ETL Software Testing: Verify the functionality and performance of ETL tools and software.

- Component Testing: Evaluate individual components within the ETL data warehouse to ensure correct operation.

- Backend Data-Driven Testing: Conduct tests on backend processes driven by data inputs.

- Test Case Development: Design, create, and execute comprehensive test cases, plans, and test harnesses.

- Issue Identification and Resolution: Detect potential issues and provide solutions to resolve them.

- Requirements and Design Approval: Review and approve requirements and design specifications.

- Data Transfer Verification: Test data transfers and flat files to confirm accuracy and completeness.

- SQL Query Writing: Develop and execute SQL queries for various testing scenarios, such as count tests.

Optimizing ETL Performance: A Comprehensive Testing Approach

Optimizing ETL (Extract, Transform, Load) systems is crucial to ensuring they can manage heavy loads and multiple user transactions efficiently. ETL Performance Testing focuses on evaluating and enhancing the performance of these systems. The main objective is to identify and address any performance bottlenecks that may hinder the efficiency of data processing. This involves scrutinizing various components, including source and target databases, data mappings, and session processes, to ensure smooth and optimal operation. By eliminating performance constraints, ETL Performance Testing helps achieve a more responsive and effective data integration environment.

Transforming ETL Testing Through Automation

Traditionally, ETL testing relies on manually written SQL scripts or visual inspection of data. Both approaches are labor-intensive and error-prone, and they often leave gaps in coverage. To improve efficiency and coverage while reducing costs, automation has become essential in both development and production environments. Automated tests run faster and can be repeated on every load, which helps catch more defects. Tools such as Informatica’s Data Validation Option automate this kind of testing.

Essential Guidelines for Effective ETL Testing

- Verify Accurate Data Transformation: Ensure that data is transformed correctly throughout the ETL process.

- Guarantee Data Integrity: Confirm that all expected data is loaded into the data warehouse without any loss or truncation.

- Handle Invalid Data: Make sure the ETL application rejects invalid data or replaces it with default values where appropriate, and reports it.

- Assess Performance and Scalability: Ensure that data is loaded into the data warehouse within the expected time frames to confirm scalability and performance.

- Implement Comprehensive Unit Testing: All methods should be covered by unit tests, regardless of their visibility.

- Utilize Effective Coverage Techniques: Measure the effectiveness of unit tests using appropriate coverage techniques.

- Maintain Test Precision: Aim for a single assertion per test case to ensure clarity and precision.

- Focus on Exception Handling: Develop unit tests specifically targeting exceptions to improve robustness.
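The unit-testing guidelines can be illustrated with Python's standard `unittest` module applied to a single transformation function. `normalize_phone` and its 10-digit rule are hypothetical. The example keeps one assertion per test and includes dedicated tests for the exception paths.

```python
import string
import unittest


def normalize_phone(raw):
    """Hypothetical transformation: keep ASCII digits only; require exactly 10."""
    if raw is None:
        raise ValueError("phone number is required")
    digits = "".join(ch for ch in raw if ch in string.digits)
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}")
    return digits


class NormalizePhoneTest(unittest.TestCase):
    # One assertion per test keeps each failure message specific.
    def test_strips_formatting(self):
        self.assertEqual(normalize_phone("(555) 123-4567"), "5551234567")

    # Exception-focused tests cover the rejection paths.
    def test_rejects_too_few_digits(self):
        with self.assertRaises(ValueError):
            normalize_phone("123-45")

    def test_rejects_missing_value(self):
        with self.assertRaises(ValueError):
            normalize_phone(None)
```

You can save this as a module (for example, a hypothetical `test_transforms.py`) and run it with `python -m unittest test_transforms`. A coverage tool can then measure how much of the transformation code these tests exercise.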
